![[S]](img/logo.png)

An informal introduction to PPETP

The goal of this page is to give you an informal introduction to PPETP: how we developed it, what kind of problems is designed to solve, how it works and why is it so cool...

Please note: This page (as the rest of this site) is still under construction and some parts are still missing. Please, be patient...

Index

- The original problem: live streaming to large audiences

- P2P streaming: the sweet and the sour

- The chuncky approach

- The PPETP approach

- PPETP features

What is PPETP and why did you developed it?

PPETP is the acronym Peer-to-Peer Epi-Transport Protocol and it can be considered as an overlay multicast protocol based on a peer-to-peer structure. In order to understand better the peculiarities of PPETP, it is worth to tell the story behind it.

The original problem: live streaming for large audiences

Our adventure in the field of P2P streaming started more or less at the end of 2007, when we were told about the problem of streaming good quality live video to a large number of users (the typical example given for this is the F1 race that can be seen by millions of users at the same time).

Although a large audience is what a TV producer most desires, the problem of streaming video over Internet to such a large number of user stems from the following formula

Since good quality video can require few Mbit/s of bandwidth, the total bandwidth required to stream to few millions of users can easily reach very large values (~1012 bit/s), requiring important investments to the TV producer.

This problem can repropose itself, with smaller figures, even in other contexts. Consider, for example, a small community (e.g. a political party or a fan club) that wants its own TV on Internet to send video content to the community members. If the community has 100-1000 members (that could be, for example, for a medium-large fan club), the bandwidth required to stream to all the community can reach the order of Gbit/s, that, although within current technical possibilities could be too expensive for the community.

An "easy" solution to this scalability problem would be

of a Content Delivery Network (CDN) where several

servers share the burden of serving the user community. However,

the amount of produced bandwidth remains the same, only

"spread" among different servers. Moreover, if the number

of users doubles, the number of servers must double too if the

server load must remain the same.

A more efficient solution would be the use of multicast.

Unfortunately, using IP multicast over a

distributed area (especially across different

different Autonomous systems) introduces several

technical difficulties.

P2P streaming: the sweet and the sour

An approach

that is considered promising is the use of a peer-to-peer network

where each user retransmits to other users the received data. With

this approach (that I like to call the fire brigade

principle), each user contributes to the data distribution and

the server "ideally" would just need to “seed”

the first few peers and the network would take care of itself.

An approach

that is considered promising is the use of a peer-to-peer network

where each user retransmits to other users the received data. With

this approach (that I like to call the fire brigade

principle), each user contributes to the data distribution and

the server "ideally" would just need to “seed”

the first few peers and the network would take care of itself.

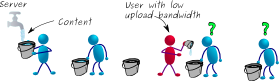

Problem solved? Not at all! Unfortunately, residential users (the most common user in an IPTV context) have several characteristics that make P2P video distribution not trivial

- Asymmetric bandwidth

-

The typical residential user is connected to Internet via an ADSL connection that has few Mbit/s of download band (sufficient to receive fairly good video), but only few hundreds of Kbit/s in upload. Therefore, the typical user has not enough bandwidth to retransmit the received video.

- Sudden departures

-

When a user stops watching a given program and leaves the network, the data flow that the user was sending to other nodes will stop too and this can happen at anytime. Even worse, the node can suddenly crash and the nodes that were depending on it will discover about the crashed node it because they will stop receiving data. Unless suitable countermeasures are taken, this would result in a poor perceived quality, usually in the form of video “freezes”.

- NATs and firewalls

- The typical residential user has not a public IP address, but it is connected to the Internet via a NAT (and often two: one is inside the ADSL router and one is used interfaces the internal network of the ISP to the public Internet). NATs are fairly transparent to client-server application such as e-mail or web browsering, where the node behind the NAT does the first contact; but they make difficult to contact in the opposite direction. Even worst, there are several types of different NATs around the network, so a solution that works in every possible case is necessarily quite complex (see, for example, the Interactive Connectivity Establishment (ICE) protocol described in RFC 5245).

- Security issues

- Not every user can be trusted and by the law of large numbers

one can bet that in an huge crowd of users there will be at least

few users that would like to do some &ldquot;practical

jokes” on the rest of the network. This is not the place

for a detailed analysis of the security risks in P2P streaming; we

recall here just few issues that are specific for this applicative

context

- Stream poisoning

-

The user “inject” in the stream some bogus content that could be both data that cannot be decoded or even an alternative content that the user wants to distribute. This will cause an incorrect decoding in the users receiving the bogus content. Even worst, the content will spread to the whole network carried by the P2P mechanism.

- Defamation

- If countermeasures are taken in order to avoid stream poisoning, a user could inject bogus packets pretending to be another user (the “victim”) so that the victim is punished.

- Denial-of-service

- Several types of Denial-of-Service attack are possible. For example, one could request lots of data from a single node, saturating its upload bandwidth. Alternatively, one could redirect the traffic of many nodes toward a single “victim” node, “drowning” in data. If these types of attacks are practical, it depends on the P2P structure employed.

How does it work a P2P network for streaming?

Giving here a complete taxonomy of the available solutions for P2P streaming would bring us too far... Moreover, such a taxonomy could become obsolete very soon since streaming over P2P is currently (December 2010) a very hot topic. However, it can be said (maybe oversimplifying a little) that most of the structures for P2P streaming are inspired to P2P solutions for file sharing. In a file sharing context a file is typically partitioned in smaller units called “chunks”. A node that desires to download a specific file would search for other nodes that have portions of the desired file and ask them chunks. By asking different chunks to different peers, a node can achieve a good download speed, even if each single peer has a small upload bandwidth. Typically peers in a file-sharing P2P network exchange information about chunk availability, so that a node can find who has a given chunk.

The description above is very rough. Depending on the specific P2P system, many details can be be different. Nevertheless, the description above suffices for our goals.

The chunky approach works fine for file sharing (where the file is static) and it can be interesting for a video on demand (VOD) context, where each user keeps on its disk the files relative to previously view videos.

However, in the live streaming context the application of the chunky approach can be more problematic. Maybe one of the major difficulties is that in the case of live streaming, too old chunks are useless and future chunks do not even exist... The most intuitive solution to this problem is the use of a buffer where chunks are stored, waiting to be played. In this way the “playing instant” is an instant in the past and there is time to achieve from other peers the chuncks relative to times between the playing instant and current time. Unfortunately, this “time shift” requires a buffer filling and this causes long starting times. The startup delay problem can be reduced somehow by some “smart tricks” such as commercials, introductory tunes or variable speed playing.

And your solution...?

The funniest part of the story is that we (the DSP group at the University of Udine) are not "network people", but "signal processing people", with a preference for video coding. At the time when we discovered about the problem of live streaming to a large audience, we were doing research about multiple description coding (MDC), a technique of robust coding that achieve robustness by splitting the multimedia content into several independent streams (known as descriptions) with a smaller bandwidth and with the property that each description can be decoded by itself to produce a low-quality version of the original content, but if one has all the descriptions can obtain a full-quality version.

When we heard about the live streaming problem, we decided to apply a principle similar to MDC: split the original multimedia stream (called content stream in PPETP jargon) into many streams (called reduced streams), where each stream requires a lower bandwidth (say, a bandwidth R times smaller) and such that the following R-recovery property holds

The tricky part is to find a way to split the original content stream so that the R-recovery property is satisfied. The default PPETP reduction scheme uses quite common an approach based on Reed-Solomon codes. If you do not know what Reed-Solomon codes are or you do not know how they can be used in a reduction scheme, I suggest you to read this.

For the sake of convenience, PPETP operates said reduction on a packet-by-packet basis, that is every content packet is processed in order to reduce its size of a factor R, so that the corresponding stream will require a bandwidth R times smaller.

This is a good place to introduce some more PPETP jargon. If a node A sends a reduced stream to a node B we will say that A is an upper peer of B and that B is a lower peer of A. This nomenclature is inspired to a picture where data flows from top to bottom.

In order to receive the content stream a node

- It contacts at least R peers (we will see that it can be convenient to have more than R peers) and asks them to send their streams of reduced packets

- As soon as it receives R reduced packets, it recovers the

content packet and

- Sends the recovered packet to the application

- If the node has some lower peer, it reduces the packet and sends the result to its lower peers

Why is your approach so cool?

Employing reduction functions has several interesting consequences

- Bandwidth reduction

- The upload bandwidth can be easily adapted to the node capabilities. The bandwidth required to nodes with small upload bandwidth can be as small as 1/R of the content bandwidth (for nodes with even smaller bandwidth puncturing can be employed).

- Reliability

- To counteract the risk of packet losses (due to network congestion, peer leaving or other reasons) the node can request data to N > R peers and it will be able to recover the content as long as it receives at least R reduced packets out of N.

- Counteracting poisoning

- To counteract the stream poisoning attack it suffices to receive data from N > R peers, recover the packet using R reduced packets and check that the remaining reduced packets are coherent with the reconstructed packet. It is possible to show that if the check is positive, the reconstructed packet is equal to the original packet even under a coordinated attack from at most N-R peers.

Anything else?

The reduction procedure is the original "core" of PPETP. During these years PPETP evolved and now is a fairly complete protocol with several interesting features, among which

- Built-in NAT traversal

- Residential nodes are often behind NATs and this makes it difficult their partecipation in P2P networks. PPETP provides NAT traversal tools based on ICE (RFC 5245)

- Security feature such as packet signing

- P2P networks can be subject to several threats. PPETP provides has some built-in tools that can be used to make the network more secure

- Built-in congestion control

- Since PPETP communication is done over UDP, it is necessary to verify the insorgence of congestion and reduce the transmitted rate (if necessary). PPETP provides built-in congestion control mechanism and packet priority classes that can be used to improve the overall QoS even in presence of congestions.

- Puncturing

- Consider the following case: a residential

node with small upload bandwidth (say, 256 kbit/s) partecipates in

P2P network used to distributed very good quality content (say 4

Mbit/s). If we want this node to contribute to data distribution,

we need a reduction factor not smaller than

(4 Mbit/s)/(256 kbit/s) = 16 Such a reduction factor can be in some cases too large and one would like to employ a smaller reduction factor. In order to solve this problem, PPETP can ask a node to puncture a reduced stream transmitting, for example, one packet every three.

This site is still under construction. Please, be patient...

This site is still under construction. Please, be patient...